Voice Acting for AI Pitfalls: Navigating Common Challenges

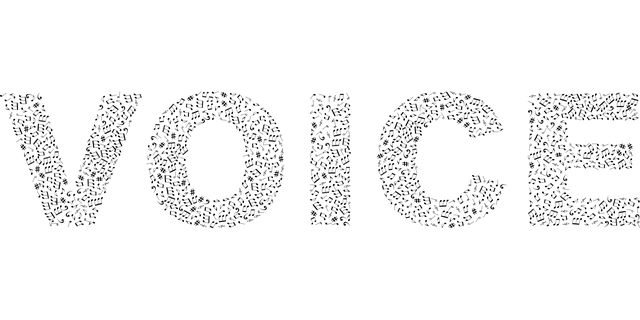

Voice acting for AI is growing fast. But it has many pitfalls.

Voice acting for AI involves giving voices to digital assistants, games, and more. But it’s not always smooth sailing. There are several challenges that come with this unique field. From capturing the right tone to ensuring emotional depth, voice actors face many hurdles.

Some pitfalls include unnatural speech patterns, lack of emotional connection, and consistency issues. These can affect the user experience and the effectiveness of the AI. Understanding these pitfalls is crucial for anyone involved in voice acting for AI. Let’s dive into the key issues and how to navigate them.

Introduction To AI Voice Acting

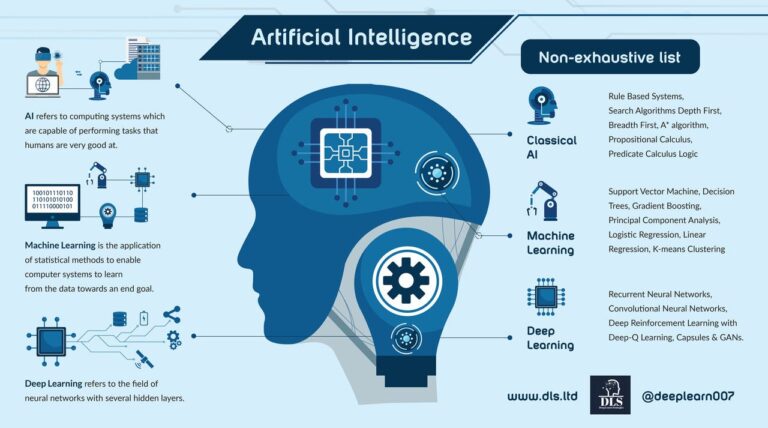

AI Voice Acting is an exciting field. It combines the art of voice acting with advanced artificial intelligence. This technology is changing how we interact with devices, applications, and services. Understanding its basics is essential for anyone interested in modern technology.

Evolution Of Voice Acting

Voice acting has evolved over the years. It started with radio dramas and cartoons. Actors used their voices to bring characters to life. This art has now extended to virtual assistants and AI systems. Today, AI can mimic human voices with great accuracy.

The progress in voice acting is impressive. Early attempts were robotic and lacked emotion. Modern AI can express different emotions and tones. This makes interactions more natural and engaging.

Importance In AI

Voice acting in AI is crucial because it shapes the user experience. Many people prefer interacting with systems that sound human. It makes the interaction feel personal and engaging.

AI voice acting is also important for accessibility. It helps people with disabilities use technology more easily. For example, visually impaired users can have on-screen text and device responses read aloud instead of relying on a display.

Businesses benefit too. Customer service can be improved with AI voice assistants. These systems can handle routine inquiries, freeing up human agents for complex tasks. This results in better customer satisfaction and efficiency.

Challenges And Pitfalls

Despite its benefits, AI voice acting has challenges. Creating natural-sounding voices is difficult. It requires vast amounts of data and sophisticated algorithms.

There are also ethical concerns. AI voices can be misused. They can create fake audio clips, leading to misinformation. Privacy is another issue. Voice data can be sensitive and needs proper protection.

Balancing these challenges is key to the future of AI voice acting. Developers must focus on improving the technology while addressing these concerns.

Common Challenges In AI Voice Acting

Voice acting for AI has taken massive strides in recent years. Yet, it faces many challenges. These challenges impact the effectiveness and appeal of AI voices. Below, we explore some common issues in AI voice acting.

Voice Quality

AI voices often sound robotic. They lack the natural flow of human speech. This can make interactions feel less personal. Achieving high voice quality is essential. It requires advanced algorithms and extensive data.

Emotional Range

Emotions are hard to replicate, and AI still struggles with them. Human voice actors convey feelings naturally, while AI voices often lack that depth. This limits their use in areas that need an emotional connection.

Technical Limitations

Voice acting for AI has seen significant growth. Yet, there are some technical limitations. These limitations impact the quality and effectiveness of AI voice systems. Understanding these issues can help developers improve AI voice acting.

Data Collection Issues

One major technical limitation is data collection. AI systems need vast amounts of voice data. This data must be diverse and high-quality. Without it, the AI may not perform well. It may sound unnatural or robotic.

Collecting diverse voice data is challenging. It requires voices from different age groups, genders, and accents. Limited or unbalanced data can lead to biased AI voices that do not represent the diversity of real human speech.
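One practical way to catch these gaps is to audit a dataset's speaker metadata before training. The sketch below is a minimal, hypothetical example: the metadata fields (`age`, `gender`, `accent`), the expected category lists, and the 25% cutoff are all illustrative assumptions, not an industry standard.

```python
from collections import Counter

# Hypothetical speaker metadata for a small voice dataset.
# Field names and category values are illustrative assumptions.
clips = [
    {"age": "18-30", "gender": "female", "accent": "US"},
    {"age": "18-30", "gender": "male", "accent": "US"},
    {"age": "31-50", "gender": "female", "accent": "British"},
    {"age": "18-30", "gender": "male", "accent": "US"},
    {"age": "51+", "gender": "male", "accent": "Australian"},
    {"age": "18-30", "gender": "female", "accent": "US"},
]

# Categories the dataset is expected to cover (also assumptions).
EXPECTED = {
    "age": ["18-30", "31-50", "51+"],
    "gender": ["female", "male"],
    "accent": ["US", "British", "Australian", "Indian"],
}


def underrepresented(clips, field, expected, min_share=0.25):
    """Return expected values of `field` whose share of clips is below min_share.

    Values that never appear count as 0%, so missing groups are flagged too.
    """
    if not clips:
        return list(expected)  # an empty dataset covers nothing
    counts = Counter(clip[field] for clip in clips)
    total = len(clips)
    return [value for value in expected if counts[value] / total < min_share]


for field, expected in EXPECTED.items():
    print(field, underrepresented(clips, field, expected))
```

On this toy data the audit flags the 31-50 and 51+ age groups and the British, Australian, and Indian accents. Checking against an expected list matters because a plain count would never report a group that is missing entirely.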

Algorithm Constraints

Algorithm constraints also play a role. AI voice systems rely on complex algorithms. These algorithms process and generate speech. If the algorithms are not advanced, the voice quality suffers.

Current algorithms sometimes fail to capture human emotion. They may struggle with tone and inflection. This makes the AI voice sound flat or monotone. Developers continue to work on improving these algorithms. But progress is slow and requires extensive testing.
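A flat delivery can be measured rather than only heard. As a rough sketch, assuming you already have a per-frame pitch contour (fundamental frequency in Hz, with 0 marking unvoiced frames), you can compute how much the pitch moves in semitones and flag contours that barely vary. The 1-semitone threshold and the sample contours are illustrative assumptions, not established values.

```python
import math
import statistics


def pitch_variation_semitones(f0_contour_hz):
    """Standard deviation of a pitch contour, measured in semitones.

    Frames with f0 <= 0 are treated as unvoiced and skipped.
    """
    voiced = [f for f in f0_contour_hz if f > 0]
    if len(voiced) < 2:
        return 0.0
    ref = statistics.median(voiced)
    # Convert each frame to semitones relative to the speaker's median pitch.
    semitones = [12 * math.log2(f / ref) for f in voiced]
    return statistics.pstdev(semitones)


def sounds_monotone(f0_contour_hz, threshold_st=1.0):
    # threshold_st is an illustrative cutoff, not a standard value.
    return pitch_variation_semitones(f0_contour_hz) < threshold_st


flat = [200, 201, 199, 200, 0, 200, 201]
lively = [180, 210, 250, 0, 230, 190, 160, 220]
print(sounds_monotone(flat), sounds_monotone(lively))  # prints: True False
```

Semitones are used instead of raw Hz because pitch perception is roughly logarithmic: a 20 Hz swing is more noticeable in a low voice than in a high one.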

Credit: www.voiceproductions.com

Ethical Concerns

Voice acting for AI is an exciting field. But it has ethical concerns. The rapid development of voice technology brings new challenges. These challenges need careful thought and action.

Consent And Privacy

Consent and privacy are key issues. AI systems can be built on voice data collected without clear consent, which raises privacy concerns. People must know how their voice data is used.

Clear consent means people agree to share their voice data. This should be a transparent process. Users need to understand who has access to their data. They should also know how it will be used.

Without clear consent, privacy can be compromised. Voice data can reveal personal information. This includes age, gender, and emotional state. It’s important to protect this sensitive data.
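In a data pipeline, purpose-specific consent can be enforced by filtering recordings before anyone uses them. The sketch below assumes a hypothetical `VoiceRecording` record that stores the purposes each speaker explicitly agreed to. It illustrates the principle only; it is not a compliance mechanism for any particular regulation.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class VoiceRecording:
    """Hypothetical record of one speaker's recording and their consent."""
    speaker_id: str
    # Purposes the speaker explicitly agreed to; an empty set means no consent.
    consented_purposes: set[str] = field(default_factory=set)


def usable_for(recordings: list[VoiceRecording], purpose: str) -> list[VoiceRecording]:
    """Keep only recordings whose speaker consented to this specific purpose."""
    return [r for r in recordings if purpose in r.consented_purposes]


recordings = [
    VoiceRecording("speaker_a", {"assistant_tts"}),
    VoiceRecording("speaker_b"),  # no consent on file
    VoiceRecording("speaker_c", {"assistant_tts", "voice_cloning"}),
]

print([r.speaker_id for r in usable_for(recordings, "voice_cloning")])
# prints: ['speaker_c']
```

Defaulting to an empty set means a recording with no consent on file is excluded rather than included by accident.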

Misuse Of Voice Data

Misuse of voice data is another ethical concern. Voice data can be used for unauthorized purposes. This can include creating fake audio clips or deepfakes. These can be harmful and misleading.

To prevent misuse, there should be strict regulations. Companies must ensure voice data is used ethically. This includes using secure systems to store and process data.

Misuse can harm individuals and society. It can lead to loss of trust in technology. Therefore, ethical guidelines are essential.

| Issue | Description |
|---|---|
| Consent and Privacy | Ensuring users know how their voice data is used |
| Misuse of Voice Data | Preventing unauthorized use of voice data |

Cultural Sensitivity

Voice acting for AI presents unique challenges. One critical aspect is cultural sensitivity. Ensuring respectful and accurate representation of different cultures is crucial. Missteps can lead to negative perceptions and user alienation.

Accent Representation

Accents play a vital role in voice acting. It’s important to represent accents authentically. Misrepresentation can offend users and seem disrespectful. For instance, a poorly executed British accent can sound inauthentic and distracting.

Consider hiring native speakers. They can provide accurate accent representation. It’s a way to ensure cultural respect and authenticity. Native speakers bring depth and credibility to their roles.

| Accent | Importance |
|---|---|
| British | Authenticity and cultural respect |
| American | Relatability for specific audiences |
| Australian | Representation of regional diversity |

Avoiding Stereotypes

Stereotypes can be harmful. They reduce cultures to narrow, often negative, traits. Avoiding stereotypes is essential for respectful voice acting. For instance, portraying all Italians as loud can be offensive.

Instead, focus on character depth. Build characters with diverse traits and backgrounds. This approach moves beyond stereotypes and fosters respect. It also enriches the user experience.

- Research cultural nuances
- Consult with cultural experts
- Create well-rounded characters

Incorporate these steps into your process. They can guide respectful and accurate cultural representation.

Credit: ilta.podbean.com

Improving AI Voice Synthesis

Voice acting for AI has seen significant advancements. Yet, some challenges remain in creating natural-sounding voices. Enhancing AI voice synthesis involves refining algorithms and integrating human oversight. This ensures voices are both realistic and reliable.

Advanced Algorithms

Modern AI voice synthesis relies heavily on advanced algorithms. These algorithms analyze and replicate human speech patterns. They consider pitch, tone, and rhythm. This level of detail allows AI to produce more natural voices.

Deep learning plays a crucial role here. AI systems learn from vast datasets of human speech. This helps them mimic human intonation and emotion. As a result, the synthesized voice sounds more authentic.

| Element | Description |
|---|---|
| Pitch | Frequency of the voice |
| Tone | Quality and character of sound |
| Rhythm | Pattern of speech |
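To make the pitch row concrete: pitch is usually estimated from the fundamental frequency of a voiced stretch of audio. Below is a minimal sketch of autocorrelation, one classic estimation technique, applied to a synthetic sine tone that stands in for a real speech frame. The sample rate, frame length, and 60 to 400 Hz search range are assumptions chosen for illustration; production pitch trackers add normalization and voiced/unvoiced detection on top of this idea.

```python
import math

SAMPLE_RATE = 16000  # samples per second (assumed)


def synth_tone(freq_hz, duration_s=0.05, sample_rate=SAMPLE_RATE):
    """Generate a pure sine tone as a stand-in for a voiced speech frame."""
    n = int(sample_rate * duration_s)
    return [math.sin(2 * math.pi * freq_hz * t / sample_rate) for t in range(n)]


def estimate_pitch(frame, sample_rate=SAMPLE_RATE, fmin=60.0, fmax=400.0):
    """Estimate the fundamental frequency of `frame` by autocorrelation.

    The signal is compared with delayed copies of itself; the delay (lag)
    with the strongest match is taken as one pitch period.
    """
    min_lag = int(sample_rate / fmax)  # shortest period considered
    max_lag = min(int(sample_rate / fmin), len(frame) - 1)  # longest period
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        score = sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


print(estimate_pitch(synth_tone(200.0)))  # prints: 200.0
```

Real speech is far messier than a sine wave, which is one reason synthesized voices can drift in pitch when the underlying models misjudge it.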

Human Oversight

Human oversight remains essential in AI voice synthesis. It ensures the final output aligns with human expectations. Experts review and fine-tune the AI-generated voices. This process helps identify nuances that algorithms might miss.

Feedback loops are vital. They involve constant human input to improve AI performance. This iterative process enhances the accuracy and authenticity of the voices. Human experts also help in training AI models with diverse speech samples.

Key Benefits of Human Oversight:

- Ensures quality and reliability
- Identifies and corrects errors
- Improves voice diversity and inclusivity
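One simple way to close the feedback loop described above is to have reviewers score each generated clip and route the low scorers back for another pass. The sketch below is hypothetical: the clip IDs, the 1 to 5 rating scale, and the 3.5 cutoff are assumptions, and a real workflow would feed the flagged clips into re-synthesis or re-recording.

```python
from statistics import mean


def clips_needing_rework(ratings, threshold=3.5):
    """Return clip IDs whose average reviewer score is below `threshold`.

    `ratings` maps a clip ID to a list of human scores on a 1-5 scale.
    Clips with no scores yet are skipped. Results are ordered worst first
    so reviewers can prioritize the weakest output.
    """
    flagged = []
    for clip_id, scores in ratings.items():
        if not scores:
            continue  # not reviewed yet
        avg = mean(scores)
        if avg < threshold:
            flagged.append((avg, clip_id))
    flagged.sort()
    return [clip_id for _, clip_id in flagged]


ratings = {
    "greeting_01": [5, 4, 5],
    "apology_02": [2, 3, 2],
    "weather_03": [3, 4, 3],
    "farewell_04": [],
}
print(clips_needing_rework(ratings))  # prints: ['apology_02', 'weather_03']
```

Repeating this after each model update turns human review into a measurable, iterative process rather than a one-off check.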

Case Studies

Voice acting for AI has seen rapid advancements. Companies across different sectors use AI voice actors. These case studies highlight successes and lessons learned.

Successful Implementations

Many companies have successfully implemented AI voice acting. One example is a customer service firm. They used AI voices to handle calls. This improved efficiency and reduced costs.

Another example is in the entertainment industry. A game developer used AI voice actors. This allowed for quick changes to dialogue. It also saved time and money.

Educational apps have also benefited. They use AI voices for interactive learning. This engages students and enhances their learning experience.

Lessons Learned

There are important lessons from these implementations. First, it’s vital to monitor the quality of AI voices. Poor quality can lead to user dissatisfaction.

Second, ensure the AI voices are inclusive. They should cater to diverse accents and languages. This ensures a wider reach.

Finally, balance AI with human touch. AI voices should not replace human interaction completely. Human oversight ensures better user experience.

Credit: www.youtube.com

Future Of Voice Acting In AI

The future of voice acting in AI is a topic of great interest. With advancements in technology, AI voice acting is set to transform many industries. This evolution brings exciting possibilities and potential challenges. Let’s explore how technology is shaping the future of AI voice acting.

Technological Advancements

Advancements in AI and machine learning are driving changes in voice acting. AI can now mimic human voices with remarkable accuracy. This progress comes from deep learning models that analyze speech patterns and tones. As a result, AI can produce more natural-sounding voices.

Text-to-speech (TTS) technology has also improved significantly, making AI voices sound more human-like. This is crucial for applications that require natural interaction. Furthermore, neural networks help improve voice synthesis by modeling and reproducing the emotional cues in speech.

Here is a table summarizing technological advancements:

| Technology | Impact |
|---|---|
| Deep Learning Models | Enhanced voice accuracy |
| Text-to-Speech (TTS) | Natural-sounding voices |
| Neural Networks | Emotional replication |

Potential Applications

AI voice acting has many potential applications across various fields. In entertainment, AI can voice characters in video games and animations. This can reduce production costs and time. Customer service is another area where AI voices can be useful. They can handle inquiries and provide support in multiple languages.

Education can benefit from AI voice acting as well. AI can create interactive learning experiences for students. It can also assist in language learning by providing clear pronunciation examples. Additionally, accessibility is enhanced with AI voices. Visually impaired individuals can use AI to read text aloud.

Some potential applications include:

- Video game character voices
- Animated film dialogues
- Customer service support
- Interactive educational tools
- Accessibility for the visually impaired

The future of voice acting in AI is bright, with many exciting opportunities and advancements on the horizon.

Frequently Asked Questions

What Are Common Pitfalls In AI Voice Acting?

Common pitfalls in AI voice acting include lack of emotion, unnatural intonation, and limited contextual understanding. These issues can lead to robotic or monotonous speech, reducing user engagement and satisfaction.

How To Improve AI Voice Acting?

To improve AI voice acting, focus on advanced algorithms, emotional range, and contextual awareness. These enhancements can create more natural and engaging vocal performances.

Why Is Emotion Important In AI Voice Acting?

Emotion is crucial in AI voice acting because it makes interactions feel human-like. It enhances user experience and engagement by adding personality and relatability.

Can AI Voice Acting Replace Human Voice Actors?

AI voice acting can complement human voice actors but cannot fully replace them. Human actors provide unique emotional depth and creativity that AI currently struggles to replicate.

Conclusion

Voice acting for AI still faces significant challenges. Mispronunciations confuse users. Poorly handled accents can cause misunderstandings. Emotional expression often feels unnatural, and understanding of context is still limited. These pitfalls hurt the user experience, so addressing them is crucial. Improved technology can help, but continuous testing and feedback remain essential.

Voice actors play a key role. Better AI voice acting benefits everyone.